I have a Development site and a Live site on Umbraco Cloud. You might have additional environments, but in theory these steps should be more or less the same. This guide will hopefully help you, but it’s by no means a fail-safe, step-by-step guide; you’ll probably run into issues and edge cases that I didn’t. You will also need access to Kudu, since you will most likely need to delete some leftover files manually. You will probably need to toggle the debug and custom errors settings in your web.config to debug any YSODs you get along the way, you will need to manually change the Umbraco version number in the web.config during the upgrade process, and you might need access to the Live Git repository endpoint in case you need to roll back.

… Good luck!

Make sure you can upgrade

Make sure you have no Obsolete data types

You cannot upgrade to v8 if you have any data types that reference old obsolete property editors. You will first need to migrate any properties using them to the non-obsolete versions of those property editors. Do this on your Dev (or lowest) environment: go to each data type and check whether the property editor listed there is prefixed with the term “Obsolete”. If it is, you will need to change it to a non-obsolete property editor. In some cases this might be tricky; in others it might be an easy switch. For example, I’m pretty sure you can switch from the Obsolete Content Picker to the normal Content Picker. Luckily for me, I didn’t have any data relying on these old editors, so I could just delete these data types. Whichever route you take, make sure the change reaches every environment, Live included, before you start the upgrade.

Make sure you aren’t using incompatible packages

If you are using packages, make sure each of them has a working v8 version.

Make sure you aren’t using legacy technology

If you are using XSLT, master pages, user controls or other weird WebForms things, you will need to migrate all of that to MVC before you can continue, since Umbraco 8 doesn’t support any of them.
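To get a rough inventory of what needs migrating, you can search your local clone for the file types involved. This is a minimal sketch for a POSIX shell such as Git Bash with GNU or BSD find; `find_legacy_files` is a hypothetical helper name. It skips Umbraco’s own v7 folders and /bin, which get replaced during the upgrade anyway. It only finds files, so legacy bits configured in the database (such as XSLT macros) won’t show up.

```sh
# Hypothetical helper: list WebForms-era files that Umbraco 8 cannot run.
# Usage: find_legacy_files path/to/site
find_legacy_files() {
  local site="${1:-.}"
  # Prune Umbraco's own v7 folders and /bin (they get replaced wholesale later),
  # then print XSLT, master page, user control and WebForms page files.
  find "$site" \
    \( -ipath "$site/umbraco" -o -ipath "$site/umbraco_client" -o -ipath "$site/bin" \) -prune -o \
    -type f \( -iname '*.xslt' -o -iname '*.master' -o -iname '*.ascx' -o -iname '*.aspx' \) -print
}
```

Running `find_legacy_files .` from the site root prints one path per line.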

Ensure all sites are in sync

It is very important that all Cloud environments are in sync with your latest code and that there’s no outstanding code waiting to be shipped between them. Then make sure that all content and media are the same across your environments: each one will eventually be upgraded independently, and you will want to use your Dev site for testing as well as for pulling content and media down to your local machine.

Clone locally, sync & backup

Once all of your Cloud sites are in sync, you’ll need to clone locally; I would advise starting with a fresh clone. Then restore all of your content and media and make sure your site runs locally on your computer. Once your local site is running and behaving like your live site, take a backup. This is just for peace of mind; upgrading your actual live site isn’t going to lose any data. To do this, close VS Code (or whatever tool you use to run your local site), navigate to ~/App_Data/ and you’ll see the Umbraco.mdf and Umbraco_log.ldf files. Make copies of those and put them somewhere safe. Also make a zip of your ~/media folder.
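If you’d rather script the backup, here is a minimal sketch for a POSIX shell such as Git Bash. The helper name and backup location are hypothetical; it assumes the default App_Data layout described above and writes a tar archive instead of a zip, because tar is usually available in Git Bash.

```sh
# Hypothetical helper: back up the local database files and the media folder.
# Stop the local site first, as described above.
# Usage: backup_local_site path/to/site path/to/backup-folder
backup_local_site() {
  local site="$1" dest="$2"
  mkdir -p "$dest" || return 1
  # Matches Umbraco.mdf plus its log file (normally Umbraco_log.ldf)
  cp "$site"/App_Data/Umbraco*.[ml]df "$dest/" || return 1
  # tar instead of zip, since tar is usually available in Git Bash
  tar -czf "$dest/media.tar.gz" -C "$site" media
}
```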

To make things easy in case you need to start again, make a copy of this entire site folder and use the copy as your work-in-progress (WIP) folder for the upgrade/migration. If you ever need to start over, just delete the WIP folder and re-copy the original.
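Resetting the WIP copy can be scripted too. This is a sketch with a hypothetical helper name and folder layout: it deletes the WIP folder and copies the original clone again, including its .git folder, so the copy is still a working repository.

```sh
# Hypothetical helper: (re)create the work-in-progress copy from the pristine clone.
# Usage: reset_wip_copy path/to/original-clone path/to/wip-folder
reset_wip_copy() {
  local original="$1" wip="$2"
  [ -d "$original" ] || { echo "no such folder: $original" >&2; return 1; }
  [ -n "$wip" ] && [ "$original" != "$wip" ] || { echo "refusing to delete the original" >&2; return 1; }
  rm -rf "$wip"
  # Copies everything, including the .git folder, so the WIP copy is still a repo
  cp -R "$original" "$wip"
}
```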

Create/clone a new v8 Cloud site

This can be a trial site; it exists purely so you can clone it and copy some files over from it. Once you’ve cloned it locally, feel free to delete that project.

Update local site files

Many people will be using a Visual Studio web application solution with NuGet, etc. In fact I am too, but for this migration it turned out to be simpler in my case to just upgrade/migrate the cloned website.

Next, I deleted all of the old files:

- The entire /bin directory: we’ll put this back together with only the required parts, since we can’t have any old leftover DLLs hanging around

- /Config

- /App_Plugins/UmbracoForms, /App_Plugins/Deploy, /App_Plugins/DiploTraceLogViewer

- /Umbraco & /Umbraco_Client

- Old tech folders - /Xslt, /Masterpages, /UserControls, /App_Browsers

- /web.config

If you use App_Code, rename it to something else for now. ASP.NET compiles App_Code at runtime, so any code in there that doesn’t build against the v8 APIs would stop the site from running, and you will probably have to refactor some of it. For now the goal is just to get the site up and running and the database upgraded, so rename it to _App_Code or whatever you like, so long as it’s different.
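The deletions and the App_Code rename can be scripted as a sketch like this one. The helper name is hypothetical, the paths mirror the list above relative to the site root, and folder names are matched case-sensitively on Linux/macOS, so adjust them if yours differ. Run it against the WIP copy, never the original clone.

```sh
# Hypothetical helper: delete the old v7 files from the WIP copy and move
# App_Code out of the way. Paths are relative to the site root.
# Usage: remove_v7_files path/to/wip-site
remove_v7_files() {
  local site="$1" p
  [ -n "$site" ] || { echo "site folder required" >&2; return 1; }
  for p in bin Config \
           App_Plugins/UmbracoForms App_Plugins/Deploy App_Plugins/DiploTraceLogViewer \
           Umbraco Umbraco_Client \
           Xslt Masterpages UserControls App_Browsers \
           web.config; do
    rm -rf "$site/$p"
  done
  # Keep the code, but stop ASP.NET from compiling it for now
  if [ -d "$site/App_Code" ]; then
    mv "$site/App_Code" "$site/_App_Code"
  fi
}
```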

Copy over the files from the cloned v8 site:

- /bin

- /Config

- /App_Plugins/UmbracoForms, /App_Plugins/Deploy

- /Umbraco

- /Views/Partials/Grid, /Views/MacroPartials, /Views/Partials/Forms: overwrite existing files, as these are updated Forms and Umbraco files

- /web.config
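Copying from the throwaway v8 clone can be scripted the same way. In this hypothetical helper the paths mirror the list above; folders are merged rather than replaced, so same-named files are overwritten (matching the advice above) while any of your own files in those folders stay put. Paths missing from the v8 clone are skipped with a warning.

```sh
# Hypothetical helper: copy the v8 files from the throwaway v8 clone into the WIP site.
# Folders are merged: same-named files are overwritten, extra files are kept.
# Usage: copy_v8_files path/to/v8-clone path/to/wip-site
copy_v8_files() {
  local v8="$1" site="$2" p
  for p in bin Config App_Plugins/UmbracoForms App_Plugins/Deploy Umbraco \
           Views/Partials/Grid Views/MacroPartials Views/Partials/Forms web.config; do
    if [ ! -e "$v8/$p" ]; then
      echo "skipping $p (not in the v8 clone)" >&2
      continue
    fi
    if [ -d "$v8/$p" ]; then
      mkdir -p "$site/$p" && cp -R "$v8/$p/." "$site/$p/" || return 1
    else
      mkdir -p "$(dirname "$site/$p")" && cp "$v8/$p" "$site/$p" || return 1
    fi
  done
}
```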

Merge any custom config

Create a git commit before continuing.

Now there are some manual updates involved. You may have had some custom configuration in some of the /Config/* files and in your /web.config file, so it’s time to have a look in your Git history. The commit you just made will show all of the changes that overwrote your /Config files and your web.config file, so you can copy any settings you want to keep back into these files: things like custom appSettings, etc.
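One way to see exactly which settings were overwritten is to diff the last commit for just the config files. This is a sketch assuming the site root is also the repository root; the helper name is hypothetical.

```sh
# Hypothetical helper: show what the last commit changed in web.config and /Config.
# Lines starting with "-" are the v7 settings that were overwritten.
# Usage: show_overwritten_config path/to/wip-site
show_overwritten_config() {
  git -C "${1:-.}" diff HEAD~1 HEAD -- web.config Config
}
```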

One very important setting is the Umbraco.Core.ConfigurationStatus appSetting: you must change this to your previous v7 version so the upgrader knows it needs to upgrade, and from which version.
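Setting the version can be done with a one-line sed edit. This hypothetical helper assumes the appSetting sits on one line as `<add key="Umbraco.Core.ConfigurationStatus" value="..." />` with key before value. The 7.15.3 in the usage comment is only an example; use the v7 version your site was actually on.

```sh
# Hypothetical helper: set the Umbraco.Core.ConfigurationStatus appSetting.
# ASSUMES the setting is on one line as <add key="..." value="..." />.
# Usage: set_config_status web.config 7.15.3   (use YOUR previous v7 version)
set_config_status() {
  local config="$1" version="$2"
  grep -q 'key="Umbraco\.Core\.ConfigurationStatus"' "$config" || {
    echo "ConfigurationStatus appSetting not found in $config" >&2
    return 1
  }
  # -i.bak works with both GNU and BSD sed; the backup is removed afterwards
  sed -i.bak -E \
    's|(<add key="Umbraco\.Core\.ConfigurationStatus" value=")[^"]*"|\1'"$version"'"|' \
    "$config" && rm -f "$config.bak"
}
```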

Upgrade the database

Create a git commit before continuing.

At this stage, you have all of the Umbraco files, config files and binary files needed to run Umbraco v8, based on the version provided by your cloned Cloud site. So go ahead and try to run the website; with any luck it will run and you will be prompted to log in and upgrade. If not, and you get YSODs or something similar, the only advice I can offer at this stage is to debug the error.

Now run the upgrader. This might also require a bit of luck, depending on what data is in your site, whether you have some obscure property editors, or whether your site is super old and has some strange database configurations. My site is super old (it started on v4), and over the many years I’ve managed to wrangle it through the version upgrades; the v8 upgrade worked too, after a few v8 patch releases were out to deal with old schema issues. If the upgrade doesn’t work, you may be shown a detailed error telling you exactly why (e.g. you have obsolete property editors installed), or it might just fail due to old schema problems. For the latter problem, perhaps some of these tickets might help you resolve it.

When you get this to work, it’s a good time to make a backup of your local DB. Close down the running website and the tool you used to launch it, then make a backup of the Umbraco.mdf and Umbraco_log.ldf files.

Fix your own code

You will probably have noticed that the site now runs and you can probably access the back office (maybe?!), but your site probably has YSODs. This is most likely because:

- Your views and C# code need to be updated to work with the v8 APIs (remember to rename your _App_Code folder back to App_Code if you use it!)

- Your packages need to be re-installed or upgraded or migrated into your new website with compatible v8 versions

This part of the migration process is going to be different for everyone. Basic sites will generally be pretty simple, but if you are using lots of packages, custom code, a Visual Studio web application and/or additional class libraries, there’s some manual work involved on your part. My recommendation is to create a Git commit each time you fix part of your site. You can always revert to a previous commit if you want, and you also have a backup of your v8 database if you ever need to revert that too. The API changes from v7 to v8 aren’t too huge, so you’ll have your local site up and running in no time!

Rebuild your deploy files

Create a git commit before continuing.

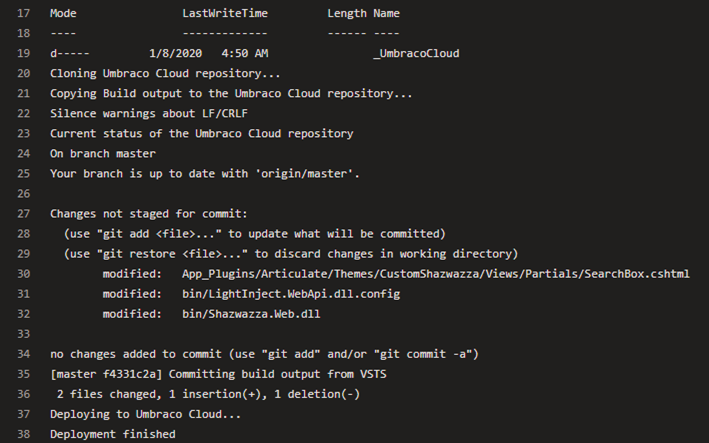

Now that your site is working locally in v8, it’s time to prep everything to be sent to Umbraco Cloud.

Since you are now running a newer version of Umbraco Deploy, you’ll want to regenerate all of the deploy files. You can do this by starting up your local site again, then opening a command prompt and navigating to the /data folder of your website. Then type:

```
echo > deploy-export
```

All of your schema items will be re-exported to new deploy files.

Create a git commit before continuing.

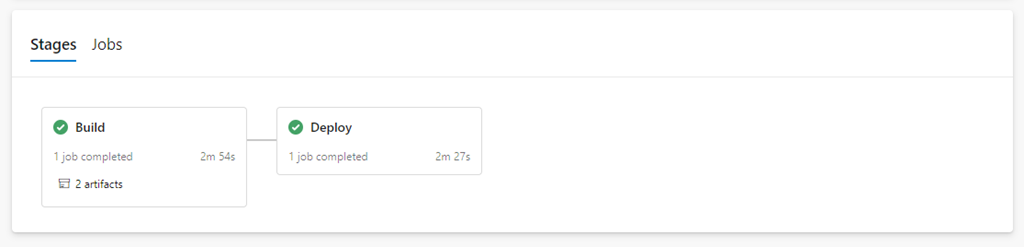

Push to Dev

In theory, if your site is working locally there’s no reason why it won’t work on your Dev site once you push it up to Cloud. Don’t worry though: if all else fails, you can always revert to a working commit for your site.

So… go ahead and push!

Once that is done, the status bar on the Cloud portal will probably be stuck at the end stage saying it’s trying to process Deploy files… but it will just hang there because it’s not able to. This is because your site is now in Upgrade mode since we’ve manually upgraded.

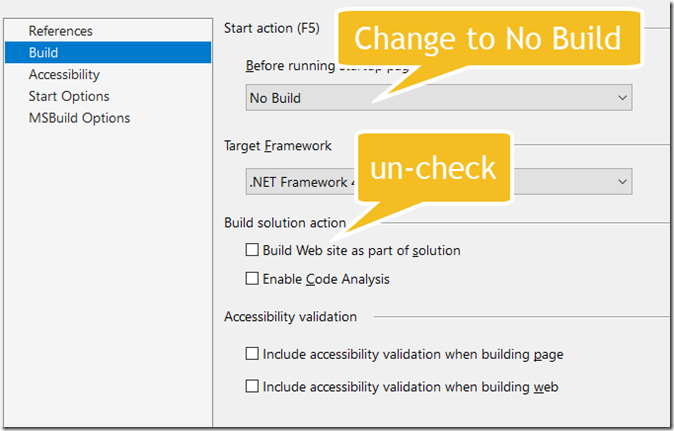

At this stage, you are going to need to log in to Kudu. Go to the cmd prompt, navigate to /site/wwwroot and edit the web.config file. The Umbraco.Core.ConfigurationStatus value is going to be the v8 version already, because that is what you committed to Git and pushed to Cloud, but we need Umbraco to detect that an upgrade is required, so change this value back to the v7 version you originally had (this is important!). While you are here, set debug="true" and customErrors mode="Off" so you can see any errors that might occur.
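Kudu’s built-in file editor is the simplest way to make these changes on Cloud. If you also need to flip the same settings on your local copy while debugging, a sed-based sketch like this one works, assuming `<compilation ... debug="...">` and `<customErrors mode="...">` each sit on a single line; the helper name is hypothetical.

```sh
# Hypothetical helper: set <compilation debug="..."> and <customErrors mode="...">.
# ASSUMES each element, with that attribute, is written on a single line.
# Usage: set_error_display web.config true Off          (show errors)
#        set_error_display web.config false RemoteOnly  (back to normal)
set_error_display() {
  local config="$1" debug="$2" mode="$3"
  sed -i.bak -E \
    -e 's|(<compilation[^>]*[[:space:]]debug=")[^"]*"|\1'"$debug"'"|' \
    -e 's|(<customErrors[^>]*[[:space:]]mode=")[^"]*"|\1'"$mode"'"|' \
    "$config" && rm -f "$config.bak"
}
```

The second usage line is the same change you make when tidying up after going live.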

Now visit the root of the site, you should be redirected to the login screen and then to the upgrade screen. With some more luck, this will ‘just work’!

Since the site was in upgrade mode when you first pushed, the Deploy files couldn’t be processed, so you need to force them to be processed again. Go back to the Kudu cmd prompt, navigate to /site/wwwroot/data and type:

```
echo > deploy
```
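When triggering an extraction from a shell you control (for example on your local clone), you can also wait for the result instead of watching the folder. This is a hedged sketch: it ASSUMES Umbraco Deploy reports the outcome by writing a deploy-complete or deploy-failed file into the same /data folder, so check the Deploy documentation for your version before relying on it. The helper name and the 300-second default timeout are arbitrary.

```sh
# Hypothetical helper: trigger a Deploy extraction and wait for the outcome.
# ASSUMPTION: Deploy writes deploy-complete or deploy-failed into the data folder.
# Usage: trigger_deploy path/to/site/data [timeout-seconds]
trigger_deploy() {
  local data="$1" timeout="${2:-300}" waited=0
  rm -f "$data/deploy-complete" "$data/deploy-failed"   # clear old results
  echo > "$data/deploy" || return 1
  while [ "$waited" -lt "$timeout" ]; do
    if [ -e "$data/deploy-complete" ]; then echo "deploy complete"; return 0; fi
    if [ -e "$data/deploy-failed" ]; then echo "deploy failed: see $data/deploy-failed" >&2; return 1; fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "no result after ${timeout}s" >&2
  return 2
}
```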

Test

Make sure your dev site is working as you would expect it to. There’s a chance you might have missed some code that needs changing in your views or other code. If that is the case, make sure you fix it first on your local site, test there and then push back up to Dev and then test again there. Don’t push to a higher environment until you are ready.

Push to Live

You might have other environments between Dev and Live; if so, follow the same steps as for pushing to Dev (i.e. once you push, go to Kudu and change the web.config version, debug and custom errors settings). Pushing to Live is the same approach, but of course your live site is going to incur some downtime. If you’ve practiced with a Staging site, you’ll know how much downtime to expect; in theory it could be as little as a couple of minutes, but if something goes wrong it could be longer.

… And Hooray! You are live on v8 :)

Before you go, there’s a few things you’ll want to do:

- log back into Kudu on your Live site and, in your web.config, turn off debug and change custom errors back to RemoteOnly

- be sure to run “echo > deploy”

- in Kudu, delete the temp folder: App_Data/Temp

- Rebuild your indexes via the back office dashboard

- Rebuild your published caches via the back office dashboard

What if something goes wrong?

I mentioned above that you can revert to a working copy, but how? Well, this happened to me: I didn’t follow my own instructions and forgot to get rid of the data types with Obsolete property editors on Live. I had fixed that on Dev, so my environments were not totally in sync before I started. When I pushed to Live and ran the upgrader, it told me that I had data types with old Obsolete property editors, and in that scenario there was nothing I could do about it, since I couldn’t log in to the back office to change anything. So I had to revert the Live site to the commit before the merge from Dev to Live. Luckily, all database changes made by the upgrader are done in a transaction, so your live data isn’t going to be changed unless the upgrader completes successfully.

To roll back, I logged into Kudu; on the home page there is a link to “Source control info”, where you can get the Git endpoint for your Live environment. I cloned that down locally, reverted the merge, committed and pushed back up to the Live site. The live site was then back to its previous v7 state and I could make the necessary changes. Once that was done, I reverted my revert commit locally, pushed back to Live, and went through the upgrade process again.
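The rollback can be sketched on the command line as follows. LIVE_GIT_URL (the endpoint from “Source control info”) and MERGE_SHA (the merge commit to undo) are placeholders, and the helper name is hypothetical. For a merge commit it uses `git revert -m 1`, which treats the first parent as the mainline. This assumes, as in the scenario above, that the merge was made into Live’s branch, so its first parent is Live’s pre-merge history. A plain commit is reverted normally.

```sh
# Hypothetical helper: revert a commit on a local clone of the Live repository.
# For a merge commit, -m 1 keeps the first parent (Live's pre-merge state) as mainline.
# Usage:
#   git clone "$LIVE_GIT_URL" live && cd live        # placeholder URL from Kudu
#   revert_commit . "$MERGE_SHA" && git push          # placeholder commit id
#   ...fix things on Live, then re-apply the upgrade by reverting the revert:
#   revert_commit . HEAD && git push
revert_commit() {
  local repo="$1" sha="$2" parents
  # rev-list --parents prints the commit id followed by its parent ids
  parents=$(git -C "$repo" rev-list --parents -n 1 "$sha" | wc -w | tr -d ' ')
  if [ "$parents" -gt 2 ]; then
    git -C "$repo" revert --no-edit -m 1 "$sha"
  else
    git -C "$repo" revert --no-edit "$sha"
  fi
}
```

`revert_commit . HEAD` only re-applies the upgrade if the revert is still the latest commit; otherwise pass the revert commit’s id.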

Next steps?

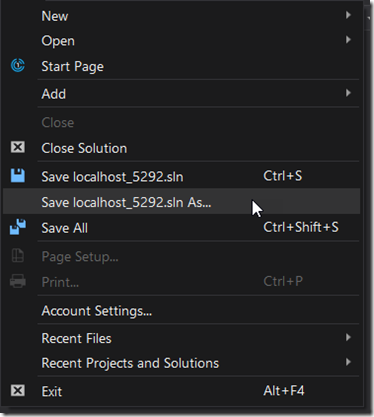

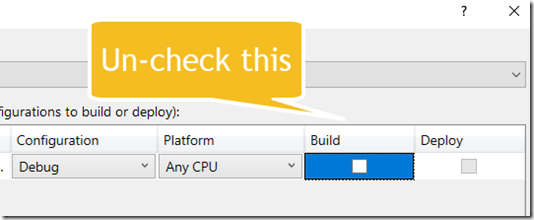

Now your site is live on v8, but there’s probably more to do for your solution. If you are like me, you will have a Visual Studio solution with a web application to power your website. I run this locally and publish to my local file system, which just happens to be the location of my cloned Git repo for my Umbraco Cloud Dev site, and then I push those changes to Cloud. So now I needed to get my VS web application to produce the same binary output as Cloud. That took a little while to figure out, since Umbraco Cloud includes some extra DLLs/packages that are not included in the vanilla Umbraco CMS package, namely “Serilog.Sinks.MSSqlServer - Version 5.1.3-dev-00232”, so you’ll probably need to add that as a package reference to your site too.
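To spot differences like that Serilog package, you can compare the DLLs in the Cloud clone’s /bin with your publish output. This hypothetical sketch compares file names only, not assembly versions, and uses diff’s usual notation: lines starting with `<` are DLLs only Cloud has, lines starting with `>` are only in your publish output.

```sh
# Hypothetical helpers: list the DLL names in a folder, then diff two /bin folders.
list_dlls() {
  (cd "$1" && find . -maxdepth 1 -type f -iname '*.dll' | sed 's|^\./||' | sort)
}
# Usage: compare_bin_dlls path/to/cloud-clone/bin path/to/publish/bin
compare_bin_dlls() {
  local cloud_list pub_list status
  cloud_list=$(mktemp) && pub_list=$(mktemp) || return 2
  list_dlls "$1" > "$cloud_list" && list_dlls "$2" > "$pub_list" || {
    rm -f "$cloud_list" "$pub_list"
    return 2
  }
  diff "$cloud_list" "$pub_list"
  status=$?
  rm -f "$cloud_list" "$pub_list"
  return "$status"   # 0 = same set of DLL names, 1 = differences found
}
```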

That’s about as far as I’ve got myself, best of luck!